Measuring semantic similarity between documents has varied applications in NLP and Artificial Intelligence, such as chatbots, voicebots, and communication across different languages. It refers to quantifying the similarity of sentences based on their meaning rather than only their syntactic structure. A semantic net such as WordNet, or word embeddings such as Google’s Word2Vec and Doc2Vec, can be used to compute semantic similarity. Let us see how.

Word Embeddings

Word embeddings are vector representations of words. A word embedding maps each word or phrase in a vocabulary to a vector of real numbers. The closeness of the vectors of two words in this space is a measure of the similarity between them. Word embeddings can be broadly classified into frequency-based (e.g. count vectors, TF-IDF, co-occurrence matrices) and prediction-based (e.g. continuous bag of words, skip-gram).
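To make the idea of closeness concrete, here is a minimal sketch of a frequency-based embedding: each word is represented by the counts of the words that occur near it, and two words are compared by the cosine of the angle between their vectors. The toy corpus, the function names and the window size are assumptions made up for this illustration.

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine_similarity(u, v):
    """Cosine of the angle between two sparse vectors stored as Counters."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


# Hypothetical toy corpus, invented for this example.
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the dog",
    "stocks fell on the market",
]
vectors = cooccurrence_vectors(corpus)
print("cat vs dog:   ", cosine_similarity(vectors["cat"], vectors["dog"]))
print("cat vs market:", cosine_similarity(vectors["cat"], vectors["market"]))
```

On this tiny corpus, "cat" and "dog" share more neighbouring words than "cat" and "market", so their vectors end up closer. A real corpus would be far larger, and the counts would usually be reweighted, for example with TF-IDF.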

Various models can be used to learn these word embeddings. One such model is Word2Vec, a neural-network-based model for learning vector representations of words from a large corpus of text. It takes the corpus as input, produces a multi-dimensional vector space, and assigns a vector to each word in the corpus. Words that appear in similar contexts end up closer together in the vector space. Similarly, Doc2Vec is a model that learns a vector representation of an entire document or paragraph.
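A prediction-based model learns from (word, context word) examples taken from a sliding window over the text. The sketch below only shows how such training pairs could be generated in the skip-gram style. It does not train a network, and the function name and window size are illustrative assumptions rather than part of any library.

```python
def skipgram_pairs(tokens, window=2):
    """Return (target, context) pairs for every word and its neighbours within `window`."""
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


tokens = "the president greets the press".split()
for target, context in skipgram_pairs(tokens, window=1):
    print(target, "->", context)
```

Words that occur in similar contexts produce similar sets of pairs, which is why a model trained on them tends to place those words close together.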

Word2Vec embeddings can be used to calculate the Word Mover’s Distance (WMD), which measures document similarity based on the context of words. WMD measures the similarity between sentences that have the same context but might not share the same words. We try to find the minimum travelling distance between two documents, i.e. the minimum cumulative distance that the embedded words of one document need to travel to reach the embedded words of the other.

For example: The President of the USA greets the press in Chicago.

Obama speaks to the media in Illinois.

These two sentences have the same context but different words, so a traditional bag-of-words approach may not detect their similarity because they have no content words in common. WMD, however, can capture the similarity because the embeddings of the corresponding words lie close together. The same approach can be used to compute document similarity. *[2]
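The two example sentences can be used for a small worked sketch of WMD. It makes some loud assumptions: the 2-D embeddings are invented for illustration (they are not real Word2Vec vectors), stop words are already removed, every word has the same weight, and both documents have the same number of words. Under those assumptions the optimal transport plan is a one-to-one pairing of words, so for very short documents we can simply try every pairing. The third, unrelated document is also hypothetical.

```python
import math
from itertools import permutations

# Hypothetical 2-D embeddings, invented for this illustration only;
# real Word2Vec vectors typically have hundreds of dimensions.
EMBEDDINGS = {
    "president": (1.0, 0.0), "obama": (0.9, 0.1),
    "greets": (0.0, 1.0), "speaks": (0.1, 0.9),
    "press": (1.0, 1.0), "media": (0.9, 1.1),
    "chicago": (2.0, 0.0), "illinois": (2.1, 0.1),
    "band": (-1.0, -1.0), "gave": (0.0, -1.0),
    "concert": (-1.0, 0.0), "japan": (-2.0, -2.0),
}


def word_distance(a, b):
    """Euclidean distance between the embeddings of two words."""
    va, vb = EMBEDDINGS[a], EMBEDDINGS[b]
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(va, vb)))


def wmd_equal_length(doc1, doc2):
    """WMD for two equally long documents with uniform word weights.

    Brute-forces every one-to-one pairing, so it is only usable for
    very short documents; general WMD needs an optimal-transport solver.
    """
    if len(doc1) != len(doc2):
        raise ValueError("this sketch only handles documents of equal length")
    n = len(doc1)
    return min(
        sum(word_distance(w, doc2[j]) for w, j in zip(doc1, perm)) / n
        for perm in permutations(range(n))
    )


# Stop words removed by hand for the example.
doc1 = ["president", "greets", "press", "chicago"]
doc2 = ["obama", "speaks", "media", "illinois"]
doc3 = ["band", "gave", "concert", "japan"]  # hypothetical unrelated sentence

print("doc1 vs doc2:", wmd_equal_length(doc1, doc2))
print("doc1 vs doc3:", wmd_equal_length(doc1, doc3))
```

With these made-up vectors, the first two documents are much closer to each other than either is to the third, even though they share no words.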

Reference: http://proceedings.mlr.press/v37/kusnerb15.pdf (Matthew J Kusner's paper "From Word Embeddings to Document Distances")

WordNet

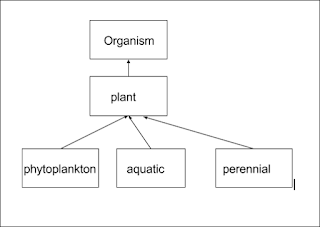

WordNet is an English lexical database. Words are grouped into synsets, i.e. sets of synonyms, which are linked together by the semantic relationships between them. This forms a graph in which synsets are linked to other synsets to build a hierarchy of concepts. As we go deeper down this hierarchy, the concepts become more specific, whereas at the top they are quite general. For example, organism is more general than plant in the figure.

Traditionally, the co-occurrence of words within documents has been used to measure similarity: the more words two documents share, the more similar they are considered to be. However, this ignores synonyms of a word and relationships between two entities. The graph structure of WordNet lets us find the shortest path between two words and hence estimate their semantic similarity. Some of the WordNet-based features used for measuring sentence similarity are:

- Path length (L) and depth (D) *[1]: Similarity is a function of the path length between the two words and the depth of their common subsumer. Path length is the length of the shortest path between the synsets containing the two words, and depth is the depth in the hierarchy of their deepest common subsumer, so similarity(w1,w2) = f(L)f(D).

- Scaling depth *[1]: Concepts at the upper levels of the hierarchy are more general and those at the lower levels are more specific, so we scale down sim(w1,w2) when the common subsumer lies at an upper level and scale it up when it lies at a lower level.

- Sentence-level similarity *[1]: A joint word set is formed from the distinct words of the two sentences. For each sentence, a vector is built over this joint set, where each entry is the similarity score between that joint-set word and the most similar word in the sentence. This gives vectors s1 and s2 for the two sentences, and the cosine similarity between them is the sentence similarity score.
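The word-level features above can be sketched on a toy hierarchy. Everything specific here is an assumption for illustration: the is-a hierarchy is made up and is not real WordNet data, f(L) is taken to be an exponential decay and f(D) a tanh so that deeper subsumers scale the score up, and the constants alpha and beta are arbitrary defaults.

```python
import math

# Hypothetical is-a hierarchy (child -> parent), loosely WordNet-like and
# made up for this illustration; it is not real WordNet data.
PARENT = {
    "organism": "entity",
    "plant": "organism",
    "animal": "organism",
    "tree": "plant",
    "flower": "plant",
    "dog": "animal",
    "cat": "animal",
}


def path_to_root(word):
    """Return the chain [word, parent, ..., root]."""
    chain = [word]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def path_length_and_depth(w1, w2):
    """Return (L, D): the shortest path length between w1 and w2 and the
    depth of their deepest common subsumer (the root has depth 0)."""
    chain1, chain2 = path_to_root(w1), path_to_root(w2)
    steps_from_w2 = {node: steps for steps, node in enumerate(chain2)}
    for steps_from_w1, node in enumerate(chain1):
        if node in steps_from_w2:
            length = steps_from_w1 + steps_from_w2[node]
            depth = len(path_to_root(node)) - 1
            return length, depth
    raise ValueError("the two words have no common subsumer")


def word_similarity(w1, w2, alpha=0.2, beta=0.45):
    """similarity = f(L) * f(D), with assumed forms exp(-alpha*L) and tanh(beta*D)."""
    length, depth = path_length_and_depth(w1, w2)
    return math.exp(-alpha * length) * math.tanh(beta * depth)


print("dog/cat      :", path_length_and_depth("dog", "cat"), word_similarity("dog", "cat"))
print("dog/tree     :", path_length_and_depth("dog", "tree"), word_similarity("dog", "tree"))
print("plant/animal :", path_length_and_depth("plant", "animal"), word_similarity("plant", "animal"))
print("tree/flower  :", path_length_and_depth("tree", "flower"), word_similarity("tree", "flower"))
```

The last two pairs have the same path length, but tree/flower meet at a deeper subsumer than plant/animal, so the depth term gives them the higher score. This is the scaling effect described in the second bullet.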

Using the above measures, we can find document similarity based on the context and meaning of a document rather than relying only on corpus statistics such as frequency of occurrence.
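The sentence-level step can be sketched as follows. The word-to-word scores in the lookup table are hypothetical numbers invented for this example. In practice they would come from a WordNet-based word similarity. The function names are likewise illustrative, and the third sentence is a made-up unrelated one.

```python
import math

# Hypothetical word-to-word similarity scores in [0, 1], made up for this
# illustration; in practice they would come from a WordNet-based measure.
WORD_SIM = {
    frozenset(["president", "obama"]): 0.8,
    frozenset(["greets", "speaks"]): 0.6,
    frozenset(["press", "media"]): 0.9,
    frozenset(["chicago", "illinois"]): 0.7,
}


def word_similarity(w1, w2):
    """Look up the toy similarity score; identical words score 1.0."""
    if w1 == w2:
        return 1.0
    return WORD_SIM.get(frozenset([w1, w2]), 0.0)


def semantic_vector(sentence, joint_words):
    """For each joint-set word, the score of the most similar word in the sentence."""
    return [max(word_similarity(j, w) for w in sentence) for j in joint_words]


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def sentence_similarity(s1, s2):
    """Build s1 and s2 vectors over the joint word set and compare them."""
    joint_words = sorted(set(s1) | set(s2))
    v1 = semantic_vector(s1, joint_words)
    v2 = semantic_vector(s2, joint_words)
    return cosine_similarity(v1, v2)


# Stop words removed by hand for the example.
s1 = ["president", "greets", "press", "chicago"]
s2 = ["obama", "speaks", "media", "illinois"]
s3 = ["band", "gave", "concert", "japan"]  # hypothetical unrelated sentence

print("s1 vs s2:", sentence_similarity(s1, s2))
print("s1 vs s3:", sentence_similarity(s1, s3))
```

Although s1 and s2 share no words, their vectors overlap through the word-level scores, while s1 and s3 have nothing in common under this toy table.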

References:

1) http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=1644735

2) https://www.analyticsvidhya.com/blog/2017/06/word-embeddings-count-word2veec/

3) https://en.wikipedia.org/wiki/WordNet
