In the early days of search engines, the main focus was on keywords and on detecting those keywords across web pages. More recently, the focus has shifted from keyword-based search to conversational queries and measures of relevancy.

Today, search engines like Google focus not only on matching the exact keywords in the search query but also on ranking results by their "relevancy" to the query. The idea is that if the engine understands what the query is about, the results can include not only web pages that contain the exact query but also pages with semantically similar text that may be relevant to it.

Using NLP in search engines makes it easier to get the exact answer to a search query and reduces the clutter a user has to go through to find relevant material. NLP also lets the search engine honour the context of a query and its relation to the previous query.

Voice search is also responsible for the change in the dynamics of search engines.

Search queries from voice search and from traditional text search differ vastly in their structure and in the results wanted.

This is more related to the task of Natural Language Understanding (NLU), which is a subset of NLP.

Two methodologies used for this task are relevancy modelling and Latent Semantic Indexing (LSI).

In this blog post, I am going to introduce how relevancy is modelled.

GloVe is an NLP algorithm developed at Stanford. The project page describes it as follows:

"GloVe is an unsupervised learning algorithm for obtaining vector representations for words. Training is performed on aggregated global word-word co-occurrence statistics from a corpus, and the resulting representations showcase interesting linear substructures of the word vector space."
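To make "word-word co-occurrence statistics" a little more concrete, here is a minimal sketch that counts how often pairs of words appear near each other. It is a simplified, unweighted illustration and not GloVe's actual training code; the function name `cooccurrence_counts`, the window size and the tiny corpus are all made up for this example.

```python
from collections import Counter

def cooccurrence_counts(sentences, window=2):
    """Count how often two words appear within `window` tokens of each other."""
    counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            # Look at up to `window` neighbours to the right and record the
            # pair in both directions so the counts are symmetric.
            for j in range(i + 1, min(i + 1 + window, len(tokens))):
                counts[(word, tokens[j])] += 1
                counts[(tokens[j], word)] += 1
    return counts

# Hypothetical two-sentence corpus, only for illustration.
corpus = [
    "the frog sat on the log",
    "the toad sat on the rock",
]
counts = cooccurrence_counts(corpus, window=2)
print(counts[("sat", "on")])     # "sat" and "on" are neighbours in both sentences
print(counts[("frog", "toad")])  # these two never share a sentence
```

A real corpus would contain millions of sentences, and a model like GloVe learns word vectors from such aggregated counts rather than from individual sentences.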

GloVe converts words to vector representations based on measures of co-occurrence. Two properties of the resulting vectors are worth highlighting: nearest neighbours and linear substructures. Nearest neighbours, measured by the distance or similarity between word vectors, provide an effective way to reveal rare but relevant words. For example, frog is relevant (related) to words like frogs, toad, Litoria, Leptodactylidae, rana, lizard and eleutherodactylus.
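As a rough sketch of how a nearest-neighbour lookup works, the snippet below ranks words by cosine similarity to a query word. The three-dimensional vectors in `toy_vectors` are invented for illustration (real GloVe vectors have 50 to 300 dimensions and are loaded from the published files), and `nearest_neighbours` is a hypothetical helper name.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up toy vectors, NOT real GloVe values.
toy_vectors = {
    "frog":   [0.9, 0.8, 0.1],
    "toad":   [0.85, 0.75, 0.2],
    "lizard": [0.6, 0.9, 0.3],
    "lawyer": [0.1, 0.2, 0.95],
}

def nearest_neighbours(word, vectors, k=2):
    """Return the k words whose vectors are most similar to `word`."""
    target = vectors[word]
    scored = [
        (other, cosine_similarity(target, vec))
        for other, vec in vectors.items()
        if other != word
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

neighbours = nearest_neighbours("frog", toy_vectors, k=2)
for other, score in neighbours:
    print(other, round(score, 3))
```

With these toy values, "toad" and "lizard" rank above "lawyer", which mirrors the kind of neighbour list shown for frog.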

Linear substructure differentiating between words

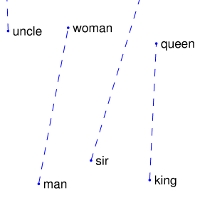

Linear substructures provide a way to distinguish between two words more precisely. The simplest estimate is the vector difference between the two word vectors.
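The vector difference can be sketched in a few lines. The vectors below are invented so that the second coordinate loosely plays the role of "gender"; in real GloVe vectors no single coordinate has such a clean meaning, and the names `toy` and `subtract` are assumptions made for this example.

```python
def subtract(a, b):
    """Element-wise difference a - b of two equal-length vectors."""
    return [x - y for x, y in zip(a, b)]

# Made-up toy vectors, NOT real GloVe values.
toy = {
    "man":   [0.9, 0.1, 0.2],
    "woman": [0.9, 0.9, 0.2],
    "king":  [0.8, 0.1, 0.9],
    "queen": [0.8, 0.9, 0.9],
}

# The difference isolates what separates the two words in each pair.
offset_man_woman = subtract(toy["woman"], toy["man"])
offset_king_queen = subtract(toy["queen"], toy["king"])

print(offset_man_woman)
print(offset_king_queen)
```

In this toy setup the two offsets come out the same, which is the idea behind a linear substructure: the same kind of difference between words shows up as roughly the same direction in the vector space.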

Vector representation of "Seo"

The words above are not necessarily related to "dui lawyer", but their relevancy is high in that one is likely to encounter them in the context of "dui lawyer".

Representing words as vectors offers another advantage: we can manipulate words as vectors and perform arithmetic operations on them.

The above image shows that the GloVe vector for Queen is very close to the result of King - Man + Woman. This is beneficial for SEO, as we can approximately obtain new words to search for using vector operations.
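The King - Man + Woman arithmetic can be sketched as follows. Again, the vectors are made-up toy values rather than real GloVe output, and `analogy` is a hypothetical helper: it computes the target vector and returns the closest remaining word by cosine similarity.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up toy vectors, NOT real GloVe values.
toy = {
    "king":   [0.8, 0.1, 0.9],
    "queen":  [0.8, 0.9, 0.9],
    "man":    [0.9, 0.1, 0.2],
    "woman":  [0.9, 0.9, 0.2],
    "lawyer": [0.1, 0.5, 0.4],
}

def analogy(a, b, c, vectors):
    """Return the word closest to vectors[a] - vectors[b] + vectors[c]."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    # Exclude the three input words so they cannot be returned as the answer.
    candidates = {w: v for w, v in vectors.items() if w not in (a, b, c)}
    return max(candidates, key=lambda w: cosine_similarity(target, candidates[w]))

result = analogy("king", "man", "woman", toy)
print(result)
```

For keyword research, the same pattern could be tried with real pretrained vectors: start from a known term, add or subtract related terms, and inspect the nearest words as candidate search phrases.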

Conclusion:

NLP, even though a relatively new entrant in search engines, is already very powerful in modelling how to decompose a query and find relevant answers. Google's Hummingbird update deeply integrates NLP and NLU into the search algorithm and is already providing a better search experience. With supervised learning, relevancy measures, LSI and LDA, NLP is expected to completely overhaul search and SEO in the coming years.

REFERENCES:

https://nlp.stanford.edu/projects/glove/

http://searchengineland.com/word-vectors-implication-seo-258599

https://contentequalsmoney.com/natural-language-the-next-big-thing-in-seo/

http://www.searchengineworkshops.com/articles/lsi-and-nlp-truths.html
